mighty.monitor.monitor.MonitorEmbedding

- class mighty.monitor.monitor.MonitorEmbedding(mutual_info=None, normalize_inverse=None)

A monitor for mighty.trainer.TrainerEmbedding.

Methods

__init__([mutual_info, normalize_inverse])

advanced_monitoring([level])Sets the extent of monitoring.

batch_finished(model)Batch finished monitor callback.

batch_finished_first_epoch(model)First batch finished monitor callback.

clear()Clear out all Visdom plots.

clusters_heatmap(mean[, title, save])Cluster centers distribution heatmap.

embedding_hist(activations)Plots embedding activations histogram.

epoch_finished()Epoch finished callback.

log(text)Logs the text.

log_model(model[, space])Logs the model.

log_self()Logs the monitor itself.

open(env_name[, offline])Opens a Visdom server.

plot_explain_input_mask(model, mask_trainer, ...)Plot the mask showing where the model is “looking” to make decisions about the class label.

plot_psnr(psnr[, mode])If given, plot the Peak Signal to Noise Ratio.

register_func(func)Register a plotting function to call at the end of each epoch.

register_layer(layer, prefix)Register a layer to monitor.

reset()Reset the parameter records statistics.

update_accuracy(accuracy[, mode])Update the accuracy plot with a new value.

update_accuracy_epoch(labels_pred, ...)The callback to calculate and update the epoch accuracy from a batch of predicted and true class labels.

update_grad_norm()Update the parameters gradient norm.

update_gradient_signal_to_noise_ratio()Update the SNR, mean divided by std, of the model weight gradients.

update_initial_difference()Update the L1 normalized difference between the current and starting weights (before training).

update_l1_neuron_norm(l1_norm)Update the L1 neuron norm heatmap, normalized by the batch size.

update_loss(loss[, mode])Update the loss plot with a new value.

update_mutual_info()Update the mutual info.

update_pairwise_dist(mean, std)Updates L1 mean pairwise distance and intra-STD of embedding centroids.

update_sparsity(sparsity, mode)Update the L1 sparsity of the hidden layer activations.

update_weight_histogram()Update the model weights histogram.

update_weight_trace_signal_to_noise_ratio()Update the SNR, mean divided by std, of the model weights.

Attributes

is_active

n_classes_format_ytickstep_1

- advanced_monitoring(level=7)

Sets the extent of monitoring.

- Parameters:

- level : MonitorLevel, optional

New monitoring level to apply.

* DISABLED - only basic metrics are computed (memory tolerable)
* SIGNAL_TO_NOISE - track SNR of the gradients
* FULL - SNR, sign flips, weight hist, weight diff

Default: MonitorLevel.NORMAL

Notes

Advanced monitoring is memory consuming.

- batch_finished(model)

Batch finished monitor callback.

- Parameters:

- model : nn.Module

A model that has been trained during the last epoch.

- batch_finished_first_epoch(model)

First batch finished monitor callback.

- Parameters:

- model : nn.Module

A model that has been trained during the last epoch.

- clear()

Clear out all Visdom plots.

- clusters_heatmap(mean, title=None, save=False)

Cluster centers distribution heatmap.

- Parameters:

- mean : (C, V) torch.Tensor

The mean of C clusters (vectors of size V).

- title : str or None, optional

An optional title for the plot. If None, set to “Embedding activations heatmap”. Default: None

- save : bool, optional

Save the heatmap plot in a separate fixed window. Default: False

- embedding_hist(activations)

Plots embedding activations histogram.

- Parameters:

- activations : (N,) torch.Tensor

The averaged embedding vector.

- epoch_finished()

Epoch finished callback.

- property is_active

- Returns:

- bool

Whether a Visdom server is initialized.

- log(text)

Logs the text.

- Parameters:

- text : str

Log text.

- log_model(model, space='-')

Logs the model.

- Parameters:

- model : nn.Module

A PyTorch model.

- space : str, optional

A space substitution to correctly parse HTML later on. Default: ‘-’

- log_self()

Logs the monitor itself.

- open(env_name: str, offline=False)

Opens a Visdom server.

- Parameters:

- env_name : str

Environment name.

- offline : bool

Offline mode (True) or online (False).

- plot_explain_input_mask(model, mask_trainer, image, label, win_suffix='')

Plot the mask showing where the model is “looking” to make decisions about the class label. Based on [1].

- Parameters:

- model : nn.Module

The model.

- mask_trainer : MaskTrainer

An instance of MaskTrainer.

- image : torch.Tensor

The input image to investigate and plot the mask on.

- label : int

The class label to investigate.

- win_suffix : str, optional

The unique window suffix to distinguish different scenarios. Default: ‘’

References

[1] Fong, R. C., & Vedaldi, A. (2017). Interpretable explanations of black boxes by meaningful perturbation. In Proceedings of the IEEE International Conference on Computer Vision (pp. 3429-3437).

- plot_psnr(psnr, mode='train')

If given, plot the Peak Signal to Noise Ratio.

Used in training autoencoders.

- Parameters:

- psnr : torch.Tensor or float

The Peak Signal to Noise Ratio scalar.

- mode : {‘train’, ‘test’}, optional

The update mode. Default: ‘train’
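For reference, the standard PSNR definition is 10 · log10(MAX² / MSE), where MAX is the largest possible signal value. The sketch below computes it in plain Python. The helper name `psnr` and the `max_value` argument are illustrative and not part of the library, which may compute the scalar differently before passing it to plot_psnr.

```python
import math


def psnr(original, reconstructed, max_value=1.0):
    """Peak Signal to Noise Ratio in dB for two equally sized flat sequences.

    Hypothetical helper for illustration only.
    """
    if len(original) != len(reconstructed):
        raise ValueError("inputs must have the same length")
    # Mean squared error between the signal and its reconstruction.
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf  # identical signals have unbounded PSNR
    return 10.0 * math.log10(max_value ** 2 / mse)


pixels = [0.0, 0.5, 1.0, 0.25]
reconstruction = [0.1, 0.4, 0.9, 0.35]  # every value is off by 0.1
value = psnr(pixels, reconstruction)    # about 20 dB
```

A higher value means the reconstruction is closer to the input, which is why the metric is commonly tracked for autoencoders.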

- register_func(func)

Register a plotting function to call at the end of each epoch.

The func must have only one argument viz, a Visdom instance.

- Parameters:

- func : callable

User-provided plot function with one argument viz.
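A minimal sketch of a function with the required shape follows. `RecordingViz` is a hypothetical stand-in used only so the example runs without a Visdom server, and `plot_note` is an illustrative name. The `text(text, win=...)` call mirrors the Visdom client method of the same name.

```python
class RecordingViz:
    """Hypothetical stand-in for a Visdom instance; it only records calls."""

    def __init__(self):
        self.calls = []

    def text(self, text, win=None, opts=None):
        self.calls.append(("text", text, win))


def plot_note(viz):
    # The single argument is the Visdom instance supplied by the monitor.
    viz.text("Epoch finished", win="notes")


# With a real monitor this would be registered as:
#     monitor.register_func(plot_note)
# Here the function is called directly on the stand-in.
viz = RecordingViz()
plot_note(viz)
```

Any state the function needs beyond viz has to be captured in a closure or bound with functools.partial, since it is called with a single argument.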

- register_layer(layer: Module, prefix: str)

Register a layer to monitor.

- Parameters:

- layer : nn.Module

A model layer.

- prefix : str

The layer name.

- reset()

Reset the parameter records statistics.

- update_accuracy(accuracy, mode='batch')

Update the accuracy plot with a new value.

- Parameters:

- accuracy : torch.Tensor or float

Accuracy scalar.

- mode : {‘batch’, ‘epoch’}, optional

The update mode. Default: ‘batch’

- update_accuracy_epoch(labels_pred, labels_true, mode)

The callback to calculate and update the epoch accuracy from a batch of predicted and true class labels.

- Parameters:

- labels_pred, labels_true : (N,) torch.Tensor

Predicted and true class labels.

- mode : str

Update mode: ‘batch’ or ‘epoch’.

- Returns:

- accuracy : torch.Tensor

A scalar tensor with one value - accuracy.
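The accuracy here is presumably the fraction of predicted labels that match the true ones. The sketch below shows that computation on plain Python lists. The helper name `accuracy` is illustrative, and the library itself works on torch tensors and returns a scalar tensor.

```python
def accuracy(labels_pred, labels_true):
    """Fraction of positions where the predicted label equals the true label.

    Hypothetical helper for illustration only.
    """
    if len(labels_pred) != len(labels_true):
        raise ValueError("label sequences must have the same length")
    correct = sum(int(p == t) for p, t in zip(labels_pred, labels_true))
    return correct / len(labels_true)


acc = accuracy([0, 2, 1, 1], [0, 1, 1, 1])  # 3 of the 4 labels match
```

With torch tensors the same quantity is commonly written as `(labels_pred == labels_true).float().mean()`.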

- update_grad_norm()

Update the parameters gradient norm.

- update_gradient_signal_to_noise_ratio()

Update the SNR, mean divided by std, of the model weight gradients.

Similar to Monitor.update_weight_trace_signal_to_noise_ratio() but on a smaller time scale.

- update_initial_difference()

Update the L1 normalized difference between the current and starting weights (before training).

- update_l1_neuron_norm(l1_norm: Tensor)

Update the L1 neuron norm heatmap, normalized by the batch size.

Useful to explore which neurons are “dead” and which are “super active”.

- Parameters:

- l1_norm : (V,) torch.Tensor

L1 norm per neuron in a hidden layer.
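One plausible reading of "L1 norm per neuron, normalized by the batch size" is the sum of absolute activations of each neuron over the batch, divided by the number of samples. The sketch below follows that reading on plain Python lists. The helper name `l1_neuron_norm` is hypothetical, and the exact normalization used by the library is an assumption.

```python
def l1_neuron_norm(activations):
    """Per-neuron mean absolute activation over a batch.

    activations: N rows (samples), each with V values (neurons).
    Hypothetical helper; assumes a non-empty batch.
    """
    n_samples = len(activations)
    n_neurons = len(activations[0])
    return [
        sum(abs(row[v]) for row in activations) / n_samples
        for v in range(n_neurons)
    ]


batch = [
    [0.0, 1.0, -4.0],
    [0.0, 3.0, 6.0],
]
norms = l1_neuron_norm(batch)  # [0.0, 2.0, 5.0]
# A neuron that never fires across the batch shows up with a zero norm.
dead = [v for v, value in enumerate(norms) if value == 0.0]  # [0]
```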

- update_loss(loss, mode='batch')

Update the loss plot with a new value.

- Parameters:

- loss : torch.Tensor

Loss tensor. If None, do nothing.

- mode : {‘batch’, ‘epoch’}, optional

The update mode. Default: ‘batch’

- update_mutual_info()

Update the mutual info.

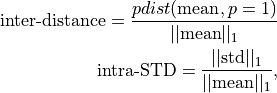

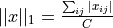

- update_pairwise_dist(mean, std)[source]¶

Updates L1 mean pairwise distance and intra-STD of embedding centroids.

where

.

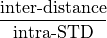

.When these two metrics equalize, the feature vectors, embeddings, become indistinguishable. We want

to be

high and

to be

high and  to keep low.

to keep low.- Parameters:

- mean, stdtorch.Tensor

Tensors of shape (C, V). The mean and standard deviation of C clusters (vectors of size V).

- Returns:

- torch.Tensor

A scalar, a proxy for the signal-to-noise ratio.
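The exact formulas are not recoverable from this page, so the following is only a sketch under stated assumptions: the inter-cluster term is taken as the mean L1 distance over all pairs of centroids, the intra-cluster term as the mean L1 norm of the per-cluster standard deviations, and the returned proxy as their ratio. All helper names are hypothetical and the library may normalize differently.

```python
from itertools import combinations


def l1_distance(u, v):
    """L1 (Manhattan) distance between two vectors of equal size."""
    return sum(abs(a - b) for a, b in zip(u, v))


def pairwise_dist_ratio(mean, std):
    """mean, std: C rows of size V (one row per cluster), with C >= 2.

    Returns (inter, intra, inter / intra) under the assumptions above.
    """
    pairs = list(combinations(mean, 2))
    # Assumed inter-cluster term: mean L1 distance between centroid pairs.
    inter = sum(l1_distance(u, v) for u, v in pairs) / len(pairs)
    # Assumed intra-cluster term: mean L1 norm of the per-cluster std vectors.
    intra = sum(sum(abs(s) for s in row) for row in std) / len(std)
    return inter, intra, inter / intra


mean = [[0.0, 0.0], [2.0, 2.0], [4.0, 0.0]]
std = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
inter, intra, ratio = pairwise_dist_ratio(mean, std)
```

In this toy example every pair of centroids is 4.0 apart and every cluster has an L1 spread of 1.0, so the ratio is 4.0; a ratio near 1 would indicate that the clusters overlap.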

- update_sparsity(sparsity, mode)

Update the L1 sparsity of the hidden layer activations.

- Parameters:

- sparsity : torch.Tensor or float

Sparsity scalar.

- mode : {‘train’, ‘test’}

The update mode.

- update_weight_histogram()

Update the model weights histogram.

- update_weight_trace_signal_to_noise_ratio()

Update the SNR, mean divided by std, of the model weights.

If mean / std is large, the network is confident in which direction to “move”. If mean / std is small, the network is performing a random walk.
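The interpretation above can be illustrated on a toy trace. The sketch assumes the trace holds successive updates of a single weight, which is an assumption about what the monitor records, and the helper name `signal_to_noise` is hypothetical.

```python
from statistics import mean, stdev


def signal_to_noise(trace):
    """Mean divided by sample standard deviation of a sequence of values.

    Hypothetical helper; needs at least two values in the trace.
    """
    spread = stdev(trace)
    if spread == 0:
        return float("inf")  # constant trace: no noise at all
    return mean(trace) / spread


# Updates that consistently push the weight in one direction.
steady = [0.9, 1.1, 1.0, 0.95, 1.05]
# Updates that keep changing sign and roughly cancel out.
wandering = [1.0, -1.0, 0.8, -0.9, 0.1]

snr_steady = signal_to_noise(steady)
snr_wandering = signal_to_noise(wandering)
```

The steady trace yields a ratio well above 1, while the wandering trace yields a ratio close to 0, matching the "confident direction" versus "random walk" reading.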